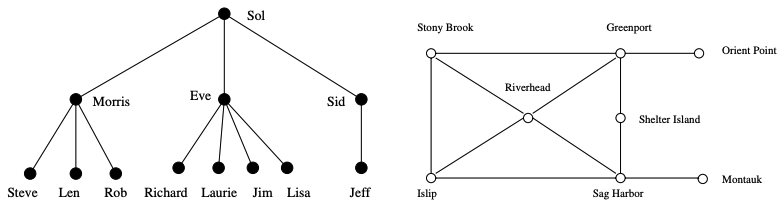

Modeling real-world structures with trees and graphs

Modeling real-world structures with trees and graphsPet Peeve: The author sometimes skips steps without an explaination (like the integer truncation in "stop and think: incremental correctness"). Some examples are hard to follow for an international student (like understanding the lottery system in "war story: pschic modeling").

A computational problem is a specification of a desired input-output relationship. e.g.

Computational problem: Sorting

Input: A sequence of values , ..., .

Output: The permutation (reordering) of the input sequence such that ... .

An instance of a problem is all the inputs needed to compute a solution to the problem. Alternatively, a computational problem is the set of all (problem) instances and the desired output. e.g.

To sort the permutation

{8, 3, 6, 7}is an instance of the general sorting problem and{3, 6, 7, 8}is the desired output.

An algorithm is a well-defined computational procedure that halts with a desired output when given any instance of the given computational problem. An algorithm is an abstract (idea) and can have multiple implementations (programs). e.g.

/*

Insertion sort is an algorithm to the sorting problem: Start with a single

element (thus forming a trivially sorted list) and then incrementally inserts

the remaining elements so that the list stays sorted.

*/

insertion_sort(item s[], int n)

{

int i,j; /* counters */

for (i=0; i<n; i++) {

j=i;

while ((j>0) && (s[j] < s[j-1])) {

swap(&s[j],&s[j-1]);

j = j-1;

}

}

}

Real-world applications involve real-world objects. However, most algorithms are designed to work on rigorously defined abstract structures. Modeling is the art of formulating your application in terms of procedures on such fundamental abstract structures so as to exploit the existing algorithms literature.

Determining that you are dealing with an instance of a general problem allows you to solve it using general well-known algorithms.

Certain problems can be modeled in several different ways, some much better than others. Modeling is only the first step in designing an algorithm for a problem. Be alert but not dismissive for how the details of your applications differ from a candidate model.

{1,4,3,2} and {4,3,2,1} are two distinct permutations of the same set of four integers.{1,3,4} and {2} are two distinct subsets of the first four integers. Order does not matter in subsets the way it does with permutations. Modeling real-world structures with trees and graphs

Modeling real-world structures with trees and graphs

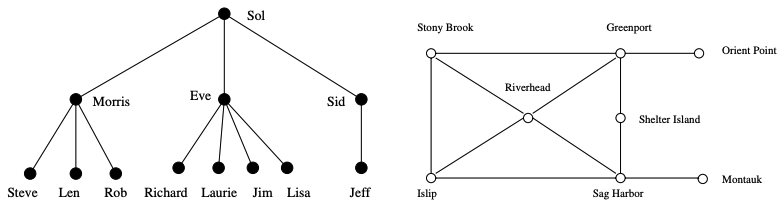

Learning to think recursively is learning to look for big things that are made from smaller things of exactly the same type as the big thing.

If you think of houses as sets of rooms, then adding or deleting a room still leaves a house behind.

n things {1, ..., n} and you get a permutation of the remaining n-1 things. Basis case: {}{1, ..., n} contains a subset of (1, ..., n - 1) obtained by deleting element n. Basis case: {}Recursive descriptions of objects require both decomposition rules and basis cases, namely the specification of the smallest and simplest objects where the decomposition stops.

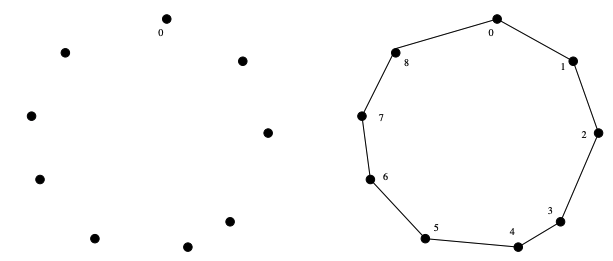

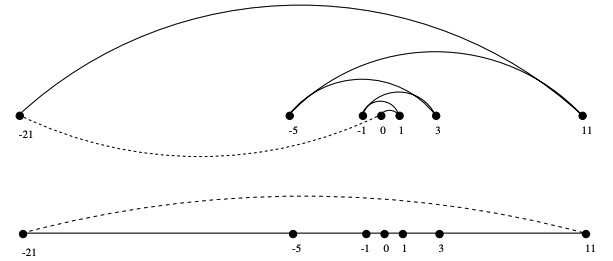

Recursive decompositions of combinatorial objects. (left column) Permutations, subsets, trees, and graphs. (right column) Point sets, polygons, and strings

Recursive decompositions of combinatorial objects. (left column) Permutations, subsets, trees, and graphs. (right column) Point sets, polygons, and strings

An algorithmic problem is specified by describing the complete set of instances it must work on and of its output after running on one of these instances.

There is a distinction between a general problem and an instance of a problem. E.g:

Problem: Sorting

Input: A sequence ofnkeys a1,...,an.

Output: The permutation (reordering) of the input sequence such that: a′1 ≤ a′2 ≤ ··· ≤ a′n−1 ≤ a′n.

Instance of sorting problem: { Mike, Bob, Sally}; { 154, 245 }

An algorithm is a procedure that takes any of the possible input instances and transforms it to the desired output.

Three desirable properties for a good algorithm:

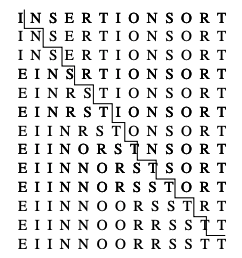

Insertion sort is an algorithm to the sorting problem: English description:

Start with a single element (thus forming a trivially sorted list) and then incrementally inserts the remaining elements so that the list stays sorted.

Pseudocode:

array = input sequence

n = array size

i = 0

while i < n

j = i

while j > 0 AND array[j] < array[j - 1]

swap element at `j` with element at `j - 1` in array

decrement j by 1

increment i by 1

Code:

An animation of the logical flow of this algorithm on a particular instance (the letters in the word “INSERTIONSORT”)

Problem: Robot Tour Optimization (aka: Traveling Salesman Problem [TSP]).

Input: A setSofnpoints in the plane.

Output: What is the shortest cycle tour that visits each point in the setS?

Starting from some point

p0, we walk first to its nearest neighborp1Fromp1, we walk to its nearest unvisited neighbor. Repeat this process until we run out of unvisited points After which we return top0to close off the tour.

NearestNeighbor(P)

Pick and visit an initial point p₀ from P

p = p₀

i = 0

While there are still unvisited points

i = i + 1

Select pᵢ to be the closest unvisited point to pᵢ₋₁

Visit pᵢ

Return to p₀ from pₙ₋₁

Repeatedly connect the closest pair of endpoints whose connection will not create a problem, such as premature termination of the cycle. Each vertex begins as its own single vertex chain. After merging everything together, we will end up with a single chain containing all the points in it. Connecting the final two endpoints gives us a cycle. At any step during the execution of this closest-pair heuristic, we will have a set of single vertices and vertex-disjoint chains available to merge.

Correct algorithms usually come with a proof of correctness, which is an explanation of why we know that the algorithm must take every instance of the problem to the desired result.

proof.QED at the bottom to denote that you have finished, representing the Latin phrase for "thus it is demonstrated."n (that is, an integer n ≥ 0) holds for all values of n. The proof consists of two steps:n = k, and prove that the statement holds for n = k + 1.n = k, is called the induction/inductive hypothesis. You're doing a thought experiment of what would happen if it was true for n = k. It might be clearer to use the phrase "suppose true when n = k" rather than "assume true when n = k" to emphasise this.n = k and then uses this assumption to prove that the statement holds for n = k + 1. We try to manipulate the statement for n = k + 1 so that it involves the case for n = k (which we assumed to be true).n - 1 elements of array A are completely sorted after n - 1 iterations of insertion sort.x to A, we find where it goes, namely the unique

spot between the biggest element less than or equal to x and the smallest element greater than x. This is done by moving all the greater elements

back by one position, creating room for x in the desired location. ■m, which can be listed as p₁,..., pₘ. So let's assume this is the case and work with it.N has the property that it is divisible by each and every one of the known primes, because of how it was built.N + 1. It can't be divisible by p₁ = 2, because N is.p₂ = 3 and every other listed prime. Since a +1 can’t be evenly split by any of the prime numbers because they are bigger.N + 1 doesn't have any non-trivial factor, this means it must be prime.m prime numbers, none of which are N + 1, because N + 1 > m.Show that a + b can be less than min(a, b).

When:

a and b < 0(i.e: negative)

a <= b

Then:

min(a, b) = a

a + b < a

Example:

min(-6, -5) = -6

-6 + -5 = -6 -5 = -11

-11 < -6

Show that a × b can be less than min(a, b).

When:

a < 0(i.e: negative)

b > 0(i.e: positive)

Then:

min(a, b) = a

a * b < a

Example:

min(-3, 4) = -3

-3 * 4 = -12

-12 < -3

Design/draw a road network with two points a and b such that the fastest route between a and b is not the shortest route.

a──────c──────b

│ │

│ │

└────d────────┘

Route `acb` have a toll gate, is in development and have a speed limit.

Route `adb` is longer, but has none of these impediment.

Route `adb` will be faster than the shorter `acb` route.

a────┐ ┌────b

│ └─c─┘ │

│ │

└──────d──────┘

Route `acb` is the shortest but has 4 turns.

Route `adb` is the longest and has only 2 turns.

The analysis of algorithms is the process of finding the computational complexity of algorithms.

For an ordered list of size ($33$ in the example above), binary search takes at most check steps ($5$ steps in the example above); while linear search takes at most check steps ($28$ steps in the example above).

The computational complexity or simply complexity of an algorithm is the amount of resources required to run it.

Common types of resources include:

The computational complexity of an algorithm can be measured only when given a model of computation.

The most commonly used model of computation is the Random Access Machine (RAM) which is a hypothetical computation model where:

+, *, -, =, if, call, etc) takes exactly unit-time (i.e. 1 step).The RAM is a simple model of how computers perform. It allows the analysis of algorithms in a machine-independent way by assuming that simple operations take a constant amount of time on a given computer and change only by a constant factor when run on a different computer.

It doesn’t capture the full complexity of real computers (e.g. multiplying two numbers takes more time than adding two numbers on most processors). However, the RAM is still an excellent model for understanding how an algorithm will perform on a real computer.

A model is a simplification or approximation of reality and hence will not reflect all of reality. […] "all models are wrong, but some are useful." — Model Selection and Multimodel Inference

Under the RAM model, we measure the computational complexity by counting the number of simple operations (also called steps) an algorithm takes on a given problem instance.

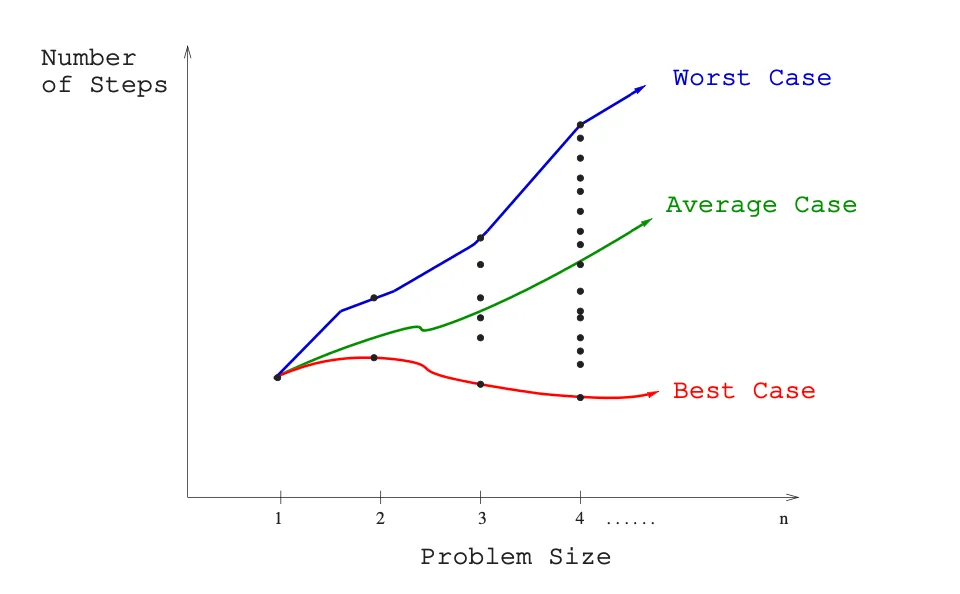

As the amount of resources required to run an algorithm generally varies with the size of the input, the complexity is typically expressed as a function , where is the size of the input and is the computational complexity.

Aside: We can also use any other characteristic of the input influencing the computational complexity.

However, the complexity of an algorithm may vary dramatically for different inputs of the same size. Therefore, three complexity functions are commonly used:

There are three problems with the complexity functions above:

Complexity theory seeks to quantify the intrinsic time requirements of algorithms (independent of any external factors), that is, the basic time constraints an algorithm would place on any computer .

For these reasons, one generally focuses on the behavior of the complexity for large , that is on its asymptotic behavior when tends to infinity. Therefore, the complexity is generally expressed by using big O notation.

Big O notation is a mathematical notation that describes the limiting behavior of a function when the argument tends towards infinity. The formal definition of the big O notations are:

Notation:

Meaning: There exists some constant such that for large enough (i.e. for all , for some constant $n_0$).

Notation:

Meaning: Means there exists some constant such that for large enough (i.e. for all , for some constant $n_0$).

Notation:

Meaning: Means there exists some constants and such that and for large enough (i.e. for all , for some constant $n_0$).

The best/average/worst-case computational complexity is a function of the size of the input for a certain algorithm.

The , , and notations are used to describe the relationship between two functions.

Hence, each best/average/worst case computational complexity function has its corresponding , , and notation.

Conflating the big O notations with the computational complexity functions likely stems from the fact that in everyday use, “the big O of the worst-case computational complexity function” is used interchangeably with just “big O”, “time complexity”, “complexity”, etc.

There are two accepted abuses of notation in the computer science industry:

Abuse of the equal sign: Technically, the appropriate notation is , because the equal sign does not imply symmetry.

Abuse of the Big O notation: The big O notation is often used to describe an asymptotic tight bound where using big Theta notation would be more factually appropriate. Big O notation provides an upper bound, but it doesn't necessarily provide a tight upper bound. For example, is , but it is also , , etc. For contrast, the equation is only .

The function appearing within the is typically chosen to be as simple as possible, omitting constant factors and lower-order terms.

Constant functions:

Logarithmic functions:

Linear functions:

Superlinear functions:

Quadratic functions:

Cubic functions:

Exponential functions:

Factorial functions:

Addition:

Multiplication by a constant:

Multiplication by non-constant:

Transitivity:

// Selection sort repeatedly identifies the smallest remaining unsorted element

// and puts it at the end of the sorted portion of the array.

void selectionSort(int arr[], int n) {

int i, j, minimum_element_index;

// One by one move the boundary of the unsorted subarray

for (i = 0; i < n; i++) {

// Find the minimum element in the unsorted array

minimum_element_index = i; // Assume the current element is the minimum

for (j = i + 1; j < n; j++) {

// If we find a smaller element, update the minimum_element_index

if (arr[j] < arr[minimum_element_index]) {

minimum_element_index = j;

}

}

// Swap the found minimum element with the first element

swap(&arr[minimum_element_index], &arr[i]);

}

}

Analysis of the selection sort algorithm:

if statement is executed is:

A logarithm is an inverse exponential function:

Exponential functions grow at a fast rate while logarithmic functions grow at a slow rate. Logarithms arise in any process where things are repeatedly halved. e.g.

Logarithms and binary search:

Logarithms and trees:

Logarithms and bits:

A direct consequence of the product property is the power property: $ \log_b (a^c) = c \cdot \log_b (a) $

Since is a constant, this implies raising the logarithm’s argument to a power doesn't affect the growth rate: $ \log_b (a^c) = \Theta (\log_b (a)) $

Changing the base of to base-c simply involves multiplying by : $ \log_b (a) = \frac{\log_c (a)}{\log_c (b)} \

\log_c (a) = \log_b (a) \cdot \log_c (b) $

Since and are constants, this property implies that the base of a logarithm has no impact on the growth rate: $ \log_2 (1,000,000) = 19.9316\ \log_3 (1,000,000) = 12.5754\ \log_100 (1,000,000) = 3 $

Aside: In practice, an asymptotically inefficient algorithm may be more efficient for small input sizes. This is particularly used in hybrid algorithms, like Timsort, which use an asymptotically efficient algorithm (here merge sort, with time complexity $n \log n$), but switch to an asymptotically inefficient algorithm (here insertion sort, with time complexity $n^2$) for small data, as the simpler algorithm is faster on small data.

int in a programming language sense is a fixed-width data structure. An integer in a mathematical sense is an ADT. For most purposes the user can work with the abstraction rather than the concrete choice of representation, and can simply use the data as if the type were truly abstract. defines the time it takes to perform the $i$-th addition.

The first case of :

The second case of :

addition is performed.e.g. Given a dynamic array initialized with a capacity of :

| i | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | |---|---|---|---|---|---|---|---|---|---|---| | | $1$ | $2$ | $3$ | $1$ | $5$ | $1$ | $1$ | $1$ | $9$ | $1$ | | Capacity | | | | | | | | | | |

e.g. Given :

We see that in both cases of the function, there is a . So summation from to results in:

The second operand represents the total cost of the copy operation that occurs on each resizing. For addition, this happens times. e.g.

| n | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | |---|---|---|---|---|---|---|---|---|---|---| | | | 0lt;/span> | <span class="bad">$1lt;/span> | $1 | 2lt;/span> | $2 | | | 3lt;/span> | $3 | | | | | | | | | | | | |

The second operand is the partial sum of a geometric series, which has the formula:

Applying the formula to the second operand:

is the number to which must be raised to obtain . Raising that number then to results in . Hence, the last expression can be simplified to:

The summation can be re-expressed again as:

The is inconsequencial in the time analysis, hence the summation can be simplified into:

Interpretation: A sequence of

addoperations costs at most , hence eachaddin the sequence costs at most (constant time) on average, which is the amortized cost according to the aggregate method.

Conclusion: This proves that the amortized cost of insertion to a dynamic array with doubling is $O(1)$.

Pointers represent the address of a location in memory. Pointers are the connections that hold the nodes (i.e. elements) of linked data structures together.

In C:

*p denotes the item that is pointed to by pointer p&p denotes the address of (i.e. pointer to) a particular variable p.NULL pointer value is used to denote unassigned pointers.All linked data structures share these properties:

Example linked-list displaying these properties:

typedef struct list {

data_type data; /* Data field */

struct list *next; /* Pointer field */

} list;

The linked-list is the simplest linked structure.

Singly linked-list has a pointer to only the successor whereas a doubly linked-list has a pointer to both the predecessor and successor.

Searching for data x in a linked-list recursively:

list *search_list(list *my_list, data_type x) {

if (my_list == NULL) {

return(NULL);

}

// If `x` is in the list, it's either the first element or located in the rest of the list.

if (my_list->data == x) {

return(my_list);

} else {

return(search_list(my_list.next, x));

}

}

Inserting into a singly linked-list at the head:

list *insert_list(list **my_list, data_type x) {

list *p; /* temporary pointer */

p = malloc(sizeof(list));

p->data = x;

p->next = *my_list;

// `**my_list` denotes that `my_list` is a pointer to a pointer to a list node.

// This line copies `p` to the place pointed to by `my_list`,

// which is the external variable maintaining access to the head of the list.

*my_list = p;

}

Deletion from a list:

// Used to find the predecessor of the item to be deleted.

list *item_ahead(list *my_list, list *x) {

if ((my_list == NULL) || (my_list->next == NULL) {

return(NULL);

}

if ((my_list->next) == x) {

return(my_list);

} else {

return(item_ahead(my_list->next, x));

}

}

// This is called only if `x` exists in the list.

void *delete_list(list **my_list, list **x) {

list *p; /* element pointer */

list *pred; /* predecessor pointer */

p = *my_list;

pred = item_ahead(*my_list, *x);

// Given that we assume `x` exists in the list,

// `pred` is only null when the first element is the target.

if (pred == NULL) { /* splice out of list */

// Special case: resets the pointer to the head of the list

// when the first element is deleted.

*my_list = p->next;

} else {

pred->next = (*x)->next

}

free(*x) /* free memory used by node */

}

The advantages of linked structures over static arrays include:

Both arrays and lists can be thought of as recursive objects:

An array is only recursive conceptually: Every array "contains" a sub-array. However, an array isn't recursive in code: A structure is recursive if while navigating through it, you come across "smaller" versions of the whole structure. While navigating through an array, you only come across the elements that the array holds, not smaller arrays.

push(x) — Inserts item x at the top of the stack.pop() — Return and remove the top item of the stack.enqueue(x) — Inserts item x at the back of the queue.dequeue() — Return and remove the front item from the queue.(key, value) pairs, such that each possible key appears at most once in the collection.put(key, value)remove(key)get(key)

typedef struct tree {

data_type data; /* Data field */

struct tree *parent; /* Pointer to parent */

struct tree *left; /* Pointer to left chid */

struct tree *right; /* Pointer to right child */

} tree;

priority.add() — an element with a given priority,delete(x) — an element,pull() — Get the the highest priority element and remove it, andpeek() — Get the the highest priority element without removing it.int hashcode = 0;

for (int i = 0; i < s.length(); i++) {

hashcode = (hashcode * 31) + s.charAt(i);

}

probing.| Operation | Average | Worst case | |-----------|---------|------------| | Search | | $O(n)$ | | Insert | | $O(n)$| | Delete | | $O(n)$ | 15. Space complexity is $O(n)$.

A common problem for compilers and text editors is determining whether the parentheses in a string are balanced and properly nested. For example, the string ((())())() contains properly nested pairs of parentheses, while the strings )()( and ()) do not. Give an algorithm that returns true if a string contains properly nested and balanced parentheses, and false if otherwise. For full credit, identify the position of the first offending parenthesis if the string is not properly nested and balanced.

fun test() {

System.out.println(areParentheseProperlyBalanced("((())())()"))

System.out.println(areParentheseProperlyBalanced(")()("))

System.out.println(areParentheseProperlyBalanced("())"))

System.out.println(areParentheseProperlyBalanced(")))"))

System.out.println(areParentheseProperlyBalanced("(("))

}

/**

* @return -1 if [string] is valid, else a positive integer

* that provides the position of the offending index.

*/

fun areParentheseProperlyBalanced(string: String): Int {

val stack = Stack<Pair<Char, Int>>()

string.forEachIndexed { index, char ->

if (char == '(') {

stack.push(char to index)

} else if (char == ')') {

if (stack.empty()) {

return index

}

stack.pop()

} else {

throw IllegalArgumentException("Only parenthesis are supported")

}

}

return if (stack.empty()) -1 else stack.peek().second

}

Give an algorithm that takes a string consisting of opening and closing parentheses, say )()(())()()))())))(, and finds the length of the longest balanced parentheses in , which is 12 in the example above. (Hint: The solution is not necessarily a contiguous run of parenthesis from $S$)

fun test() {

System.out.println(lengthOfLongestBalancedParentheses("((())())()"))

System.out.println(lengthOfLongestBalancedParentheses(")()(())()()))())))("))

System.out.println(lengthOfLongestBalancedParentheses(")()("))

System.out.println(lengthOfLongestBalancedParentheses("())"))

System.out.println(lengthOfLongestBalancedParentheses(")))"))

System.out.println(lengthOfLongestBalancedParentheses("(("))

}

fun lengthOfLongestBalancedParentheses(string: String): Int {

val stack = Stack<Char>()

var numBalancedParenthesis = 0

string.forEachIndexed { index, char ->

if (char == '(') {

stack.push(char)

} else if (char == ')') {

if (!stack.empty()) {

stack.pop()

numBalancedParenthesis++

}

}

}

// Multiplied by 2 because each balanced pair has a length of 2.

return numBalancedParenthesis * 2

}

Give an algorithm to reverse the direction of a given singly linked list. In other words, after the reversal all pointers should now point backwards. Your algorithm should take linear time.

fun test() {

val node1 = Node("Elvis", null)

val node2 = Node("Chidera", null)

node1.next = node2

val node3 = Node("Nnajiofor", null)

node2.next = node3

System.out.println(node1)

System.out.println(reverse(node1))

}

data class Node(

val element: String,

var next: Node?

)

/**

* Work through an example:

* reverse(Node1 -> Node2 -> Node3)

*

* Node1 level:

* val last = reverse(Node2 -> Node3) 🛑

*

* Node2 level:

* val last = reverse(Node3) 🛑

*

* Node3 level:

* return Node3

*

* Back to Node2 level:

* val last = Node3 🟢

* Node3.next = Node2

* Node2.next = null

* return last

*

* Back to Node1 level:

* val last = Node3 🟢

* Node2.next = Node1

* Node1.next = null

* return last

*/

fun reverse(node: Node): Node {

// Base case

if (node.next == null) return node

val last = reverse(node.next!!)

node.next!!.next = node

node.next = null

return last

}

Design a stack that supports S.push(x), S.pop(), and S.findmin(), which returns the minimum element of . All operations should run in constant time.

fun test() {

val stack = Stack()

stack.push(50)

stack.push(40)

stack.push(30)

stack.push(20)

stack.push(10)

System.out.println(stack.findMin())

stack.pop()

System.out.println(stack.findMin())

stack.pop()

System.out.println(stack.findMin())

stack.pop()

System.out.println(stack.findMin())

stack.pop()

System.out.println(stack.findMin())

stack.pop()

}

data class Element(

val num: Int,

internal val minNumSoFar: Int

)

class Stack {

private val list = mutableListOf<Element>()

fun push(num: Int) {

list += Element(

num = num,

minNumSoFar = Math.min(num, list.lastOrNull()?.minNumSoFar ?: num)

)

}

fun pop(): Int {

return list.removeLast().num

}

fun findMin(): Int {

return list.last().minNumSoFar

}

}

We have seen how dynamic arrays enable arrays to grow while still achieving constant-time amortized performance. This problem concerns extending dynamic arrays to let them both grow and shrink on demand.

a. Consider an underflow strategy that cuts the array size in half whenever the array falls below half full. Give an example sequence of insertions and deletions where this strategy gives a bad amortized cost.

b. Then, give a better underflow strategy than that suggested above, one that achieves constant amortized cost per deletion.

a. A sequence of insert, insert, delete, insert, delete, insert will lead to a bad amortized cost because the array will be busy resizing for most of the operations.

b. A better underflow strategy is to cut the array size in half whenever the array falls below . is arbitrary, but it gives the array good "slack" space. The goal is to select a number such that the array is not busy resizing for most of the operation. The smaller the cut-off ratio, the smaller the number of resizing but the more space the array uses.

Suppose you seek to maintain the contents of a refrigerator so as to minimize food spoilage. What data structure should you use, and how should you use it?

Use a priority queue. The expiry date is the priority of each element. Elements with the lowest expiry date are served first (minElement).

Work out the details of supporting constant-time deletion from a singly linked list as per the footnote from page 79, ideally to an actual implementation. Support the other operations as efficiently as possible.

fun test() {

val node3 = Node(3)

val node2 = Node(2, node3)

val node1 = Node(1, node2)

println(node1)

println(deleteInConstantTime(node1, node2))

println(deleteInConstantTime(node1, node3))

println(deleteInConstantTime(node1, node1))

}

fun deleteInConstantTime(head: Node, search: Node): Node? {

if (head == search) {

// Handle head case

return head.next

}

var node: Node? = head

var prevNode: Node? = null

while (node != null) {

if (node == search) {

if (node.next == null) {

// Handle tail case

prevNode?.next = null

} else {

node.element = node.next!!.element

node.next = node.next?.next

}

break

} else {

prevNode = node

node = node.next

}

}

return head

}

data class Node(

var element: Int,

var next: Node? = null

)

Tic-tac-toe is a game played on an board (typically n = 3$) where two players take consecutive turns placing “O” and “X” marks onto the board cells. The game is won if $n consecutive “O” or “X” marks are placed in a row, column, or diagonal. Create a data structure with space that accepts a sequence of moves, and reports in constant time whether the last move won the game.

fun test() {

var tickTacToe = TickTacToe(3)

assertFalse(tickTacToe.playX(1, 1))

assertFalse(tickTacToe.playO(1, 2))

assertFalse(tickTacToe.playX(1, 3))

tickTacToe = TickTacToe(3)

assertFalse(tickTacToe.playO(2, 1))

assertFalse(tickTacToe.playX(2, 2))

assertFalse(tickTacToe.playO(2, 3))

tickTacToe = TickTacToe(3)

assertFalse(tickTacToe.playX(3, 1))

assertFalse(tickTacToe.playX(3, 2))

assertTrue(tickTacToe.playX(3, 3))

tickTacToe = TickTacToe(3)

assertFalse(tickTacToe.playX(1, 1))

assertFalse(tickTacToe.playO(2, 1))

assertFalse(tickTacToe.playX(3, 1))

tickTacToe = TickTacToe(3)

assertFalse(tickTacToe.playO(1, 2))

assertFalse(tickTacToe.playX(2, 2))

assertFalse(tickTacToe.playO(3, 2))

tickTacToe = TickTacToe(3)

assertFalse(tickTacToe.playX(1, 3))

assertFalse(tickTacToe.playX(2, 3))

assertTrue(tickTacToe.playX(3, 3))

tickTacToe = TickTacToe(3)

assertFalse(tickTacToe.playO(1, 1))

assertFalse(tickTacToe.playO(2, 2))

assertTrue(tickTacToe.playO(3, 3))

tickTacToe = TickTacToe(3)

assertFalse(tickTacToe.playO(1, 3))

assertFalse(tickTacToe.playO(2, 2))

assertTrue(tickTacToe.playO(3, 1))

}

private fun assertTrue(value: Boolean) {

require(value)

println("Won!!!")

}

private fun assertFalse(value: Boolean) {

require(!value)

println("Not won yet")

}

class TickTacToe(private val n: Int) {

private val columns = Array(n) {

Slot(n)

}

private val rows = Array(n) {

Slot(n)

}

private val diagonal = Slot(n)

private val antiDiagonal = Slot(n)

fun playX(rowPosition: Int, columnPosition: Int): Boolean {

return play('X', rowPosition, columnPosition)

}

fun playO(rowPosition: Int, columnPosition: Int): Boolean {

return play('O', rowPosition, columnPosition)

}

private fun play(char: Char, rowPosition: Int, columnPosition: Int): Boolean {

return rows[rowPosition.toIndex].play(char) ||

columns[columnPosition.toIndex].play(char) ||

(rowPosition == columnPosition && diagonal.play(char)) ||

((rowPosition + columnPosition) == (n + 1) && antiDiagonal.play(char))

}

private val Int.toIndex get() = this - 1

class Slot(private val n: Int) {

private var number = 0

fun play(char: Char): Boolean {

val increment = if (char == 'X') 1 else -1

val target = if (char == 'X') n else -n

number += increment

return number == target

}

}

}

Write a function which, given a sequence of digits 2–9 and a dictionary of words, reports all words described by this sequence when typed in on a standard telephone keypad. For the sequence 269 you should return any, box, boy, and cow, among other words.

fun test() {

println(words(arrayOf(2, 6, 9)))

println(words(arrayOf(7, 6, 7, 7)))

}

fun words(inputDigits: Array<Int>): List<String> {

val words = setOf(

"pops",

"any",

"box",

"boy",

"cow",

"dad",

"mom",

)

val charToDigitMapping = mapOf(

'a' to 2,

'b' to 2,

'c' to 2,

'd' to 3,

'e' to 3,

'f' to 3,

'g' to 4,

'h' to 4,

'i' to 4,

'j' to 5,

'k' to 5,

'l' to 5,

'm' to 6,

'n' to 6,

'o' to 6,

'p' to 7,

'q' to 7,

'r' to 7,

's' to 7,

't' to 8,

'u' to 8,

'v' to 8,

'w' to 9,

'x' to 9,

'y' to 9,

'z' to 9,

)

val matchingWords = mutableListOf<String>()

words.forEach { word ->

word.forEachIndexed { index, char ->

val charDigit = charToDigitMapping[char] ?: return@forEach

val inputDigitAtIndex = inputDigits.getOrNull(index) ?: return@forEach

if (charDigit != inputDigitAtIndex) {

return@forEach

}

}

matchingWords += word

}

return matchingWords

}

Two strings and are anagrams if the letters of can be rearranged to form . For example, silent/listen, and incest/insect are anagrams. Give an efficient algorithm to determine whether strings and are anagrams.

fun test() {

println(isAnagram("silent", "listen"))

println(isAnagram("silence", "listen"))

println(isAnagram("incest", "insect"))

println(isAnagram("incest", "insects"))

}

fun isAnagram(s1: String, s2: String): Boolean {

val map = mutableMapOf<Char, Int>()

s1.forEach { char ->

map[char] = map.getOrPut(char) { 0 } + 1

}

s2.forEach { char ->

if (map.containsKey(char)) {

map[char] = map.getValue(char) - 1

} else {

return false

}

}

map.values.forEach { number ->

if (number != 0) {

return false

}

}

return true

}

Design a dictionary data structure in which search, insertion, and deletion can all be processed in time in the worst case. You may assume the set elements are integers drawn from a finite set and initialization can take time.

fun test() {

val dictionary = Dictionary(10)

dictionary.insert(4)

println(dictionary.search(4))

dictionary.delete(4)

println(dictionary.search(4))

}

class Dictionary(val capacity: Int) {

private val array = Array<Int?>(capacity) { null }

fun search(element: Int): Int? {

return array[element.toIndex]

}

fun delete(element: Int) {

array[element.toIndex] = null

}

fun insert(element: Int) {

array[element.toIndex] = element

}

private val Int.toIndex get() = this - 1

}

The maximum depth of a binary tree is the number of nodes on the path from the root down to the most distant leaf node. Give an algorithm to find the maximum depth of a binary tree with nodes.

fun test() {

val root = BNode(

element = 5,

left = BNode(

element = 3,

left = BNode(

element = 2,

left = BNode(

element = 1,

left = null,

right = null

),

right = null

),

right = null

),

right = BNode(

element = 7,

left = null,

right = BNode(

element = 8,

left = null,

right = null

)

)

)

println(maxDepth(root))

}

fun maxDepth(root: BNode?): Int {

if (root == null) {

return 0

}

val leftDepth = maxDepth(root.left)

val rightDepth = maxDepth(root.right)

return max(leftDepth, rightDepth) + 1

}

Two elements of a binary search tree have been swapped by mistake. Give an algorithm to identify these two elements so they can be swapped back.

fun test() {

val root = BNode(

element = 5,

left = BNode(

element = 3,

left = BNode(

element = 8, // Swapped

left = null,

right = null

),

right = null

),

right = BNode(

element = 7,

left = null,

right = BNode(

element = 2, // Swapped

left = null,

right = null

)

)

)

println(root)

val recoverer = SwappedNodeRecoverer()

recoverer.recover(root)

println(root)

}

class SwappedNodeRecoverer {

private var prev: BNode? = null

private var first: BNode? = null

private var second: BNode? = null

fun recover(root: BNode?) {

recoverInternal(root)

if (first != null) {

val firstElement = first!!.element

first!!.element = second!!.element

second!!.element = firstElement

}

}

private fun recoverInternal(root: BNode?) {

if (root == null) {

return

}

recoverInternal(root.left)

if (prev != null && root.element < prev!!.element) {

if (first == null) {

// This handles adjacent node case

first = prev

second = root

} else {

second = root

}

}

prev = root

recoverInternal(root.right)

}

}

data class BNode(

var element: Int,

var left: BNode? = null,

var right: BNode? = null,

)

Given two binary search trees, merge them into a doubly linked list in sorted order.

fun test() {

val s1 = BNode(

element = 5,

left = BNode(

element = 3,

left = null,

right = null

),

right = BNode(

element = 7,

left = null,

right = null

)

)

val s2 = BNode(

element = 10,

left = BNode(

element = 8,

left = null,

right = null

),

right = BNode(

element = 12,

left = null,

right = null

)

)

println(merge(s1, s2))

}

fun merge(tree1: BNode, tree2: BNode): Node {

val tree1Nodes = toList(tree1)

val tree2Nodes = toList(tree2)

var i1 = 0

var i2 = 0

val list = Node(Integer.MIN_VALUE) // Sentinel

var lastListNode = list

val addListNode = { node: Node ->

lastListNode.next = node

lastListNode = node

}

while (i1 < tree1Nodes.size || i2 < tree2Nodes.size) {

val tree1Node = tree1Nodes.getOrNull(i1)

val tree2Node = tree2Nodes.getOrNull(i2)

if (tree1Node == null && tree2Node != null) {

addListNode(Node(tree2Node.element))

i2++

} else if (tree2Node == null && tree1Node != null) {

addListNode(Node(tree1Node.element))

i1++

} else if (tree1Node!!.element < tree2Node!!.element) {

addListNode(Node(tree1Node.element))

i1++

} else if(tree1Node.element > tree2Node.element) {

addListNode(Node(tree2Node.element))

i2++

} else {

addListNode(Node(tree1Node.element))

i1++

addListNode(Node(tree2Node.element))

i2++

}

}

return list.next!!

}

fun toList(tree: BNode?): MutableList<BNode> {

if (tree == null) {

return mutableListOf()

}

return (toList(tree.left) + tree + toList(tree.right)).toMutableList()

}

data class BNode(

val element: Int,

var left: BNode?,

var right: BNode?,

)

data class Node(

val element: Int,

var next: Node? = null

)

Describe an $O(n)$-time algorithm that takes an $n$-node binary search tree and constructs an equivalent height-balanced binary search tree. In a height-balanced binary search tree, the difference between the height of the left and right subtrees of every node is never more than 1.

fun test() {

val root = BNode(

element = 5,

left = BNode(

element = 3,

left = BNode(

element = 2,

left = BNode(

element = 1,

left = null,

right = null

),

right = null

),

right = null

),

right = BNode(

element = 7,

left = null,

right = BNode(

element = 8,

left = null,

right = null

)

)

)

println("Unbalanced tree: $root")

println("Balanced tree: " + toBalancedTree(root))

}

fun toBalancedTree(root: BNode): BNode {

val nodes = toList(root)

return sortedListToBinaryTree(nodes)!!

}

fun sortedListToBinaryTree(list: List<BNode>): BNode? {

if (list.isEmpty()) {

return null

}

val mid = list.size / 2

val root = BNode(list[mid].element)

root.left = sortedListToBinaryTree(list.slice(0 until mid))

root.right = sortedListToBinaryTree(list.slice((mid + 1) until list.size))

return root

}

fun toList(tree: BNode?): MutableList<BNode> {

if (tree == null) {

return mutableListOf()

}

return (toList(tree.left) + tree + toList(tree.right)).toMutableList()

}

data class BNode(

val element: Int,

var left: BNode? = null,

var right: BNode? = null,

)

Find the storage efficiency ratio (the ratio of data space over total space) for each of the following binary tree implementations on nodes:

Storage efficiency ratio = For option A: Space taken by a single node = (2 pointers * 4 bytes) + (1 pointer * 4 bytes) + (4 bytes) = 16 bytes Given nodes Storage efficiency ratio =

For option B: Space taken by a single internal node = 2 pointers * 2 bytes = 4 bytes Space taken by a single leaf node = 4 bytes In a full tree, given leaf nodes, there are internal nodes. Storage efficiency ratio = (As gets larger, the constant doesn't matter, hence why it was dropped)

Option B has a higher storage efficiency to option A.

Give an algorithm that determines whether a given $n$-node binary tree is height-balanced (see Problem 3-15).

fun test() {

val root = BNode(

element = 5,

left = BNode(

element = 3,

left = BNode(

element = 2,

left = BNode(

element = 1,

left = null,

right = null

),

right = null

),

right = null

),

right = BNode(

element = 7,

left = null,

right = BNode(

element = 8,

left = null,

right = null

)

)

)

println(isBalanced(root))

}

fun isBalanced(root: BNode?): Pair<Boolean, Int> {

if (root == null) {

return true to 0

}

val (isLeftBalanced, leftHeight) = isBalanced(root.left)

if (!isLeftBalanced) {

return false to 0

}

val (isRightBalanced, rightHeight) = isBalanced(root.right)

if (!isRightBalanced) {

return false to 0

}

if (abs(leftHeight - rightHeight) > 1) {

return false to 0

}

return true to (max(leftHeight, rightHeight) + 1)

}

data class BNode(

val element: Int,

val left: BNode?,

val right: BNode?,

)

Describe how to modify any balanced tree data structure such that search, insert, delete, minimum, and maximum still take time each, but successor and predecessor now take time each. Which operations have to be modified to support this?

TODO

Suppose you have access to a balanced dictionary data structure that supports each of the operations search, insert, delete, minimum, maximum, successor, and predecessor in time. Explain how to modify the insert and delete operations so they still take but now minimum and maximum take time. (Hint: think in terms of using the abstract dictionary operations, instead of mucking about with pointers and the like.)

Use two variables to maintain the maximum and minimum element. On:

Insert(x): If , set as new minimum; If , set as new maximum.Delete(x): If , set minimum = sucessor(x)$; If $x = maximum, set .Design a data structure to support the following operations:

insert(x,T) – Insert item into the set .delete(k,T) – Delete the k$th smallest element from $T.member(x,T) – Return true iff .All operations must take time on an $n$-element set.

g

A concatenate operation takes two sets and , where every key in is smaller than any key in , and merges them. Give an algorithm to concatenate two binary search trees into one binary search tree. The worst-case running time should be , where is the maximal height of the two trees.

fun test() {

val s1 = BNode(

element = 5,

left = BNode(

element = 3,

left = null,

right = null

),

right = BNode(

element = 7,

left = null,

right = null

)

)

val s2 = BNode(

element = 10,

left = BNode(

element = 8,

left = null,

right = null

),

right = BNode(

element = 12,

left = null,

right = null

)

)

println(concat(s1, s2))

}

fun concat(s1: BNode, s2: BNode): BNode {

val s1RightMostNode = rightMostNode(s1)

s1RightMostNode.right = s2

return s1

}

fun rightMostNode(node: BNode): BNode {

if (node.right == null) {

return node

}

return rightMostNode(node.right!!)

}

data class BNode(

val element: Int,

var left: BNode?,

var right: BNode?,

)

Design a data structure that supports the following two operations:

insert(x) – Insert item from the data stream to the data structure.median() – Return the median of all elements so far.All operations must take time on an $n$-element set.

fun test() {

val ds = DataStructure()

ds.insert(5)

ds.insert(2)

ds.insert(3)

println("Median: ${ds.median()}")

ds.insert(4)

println("Median: ${ds.median()}")

}

class DataStructure {

/* The head of this queue is the least element with respect to the specified ordering. */

private val minHeap = PriorityQueue<Int>() // Represents the upper half

private val maxHeap = PriorityQueue<Int>() // Represents the lower half

fun insert(x: Int) {

if (maxHeap.isEmpty() || x <= -maxHeap.peek()) {

maxHeap.offer(-x)

} else {

minHeap.offer(x)

}

// Balance the heaps to ensure the size difference is at most 1

if (maxHeap.size > minHeap.size + 1) {

minHeap.offer(-maxHeap.poll())

} else if (minHeap.size > maxHeap.size) {

maxHeap.offer(-minHeap.poll())

}

}

fun median(): Double {

return if (minHeap.size == maxHeap.size) {

// If the number of elements is even, take the average of the middle two

(minHeap.peek() + (-maxHeap.peek())) / 2.0

} else {

-maxHeap.peek().toDouble()

}

}

}

Assume we are given a standard dictionary (balanced binary search tree) defined on a set of strings, each of length at most . We seek to print out all strings beginning with a particular prefix . Show how to do this in time, where is the number of strings.

g

An array is called k$-unique if it does not contain a pair of duplicate elements within $k positions of each other, that is, there is no and such that and . Design a worst-case algorithm to test if is $k$-unique.

g

In the bin-packing problem, we are given objects, each weighing at most 1 kilogram. Our goal is to find the smallest number of bins that will hold the objects, with each bin holding 1 kilogram at most.

g

Suppose that we are given a sequence of values and seek to quickly answer repeated queries of the form: given and , find the smallest value in . a. Design a data structure that uses space and answers queries in time. b. Design a data structure that uses space and answers queries in time. For partial credit, your data structure can use space and have query time.

fun test() {

val numbers1 = listOf(1, 3, 9, 2, 5)

println(solutionA(numbers1, 2, 5))

println(solutionA(numbers1, 1, 3))

println(solutionB(numbers1, 2, 5))

println(solutionB(numbers1, 1, 3))

}

fun solutionA(numbers: List<Int>, i: Int, j: Int): Int? {

val list = Array<Array<Int?>>(numbers.size) { Array(numbers.size) { null } }

for (p in numbers.indices) {

var minimumSoFar = Int.MAX_VALUE

for (q in (p+1) until numbers.size) {

val qthNumber = numbers[q]

if (qthNumber < minimumSoFar) {

minimumSoFar = qthNumber

}

list[p][q] = minimumSoFar

}

}

return list[i-1][j-1]

}

fun solutionB(numbers: List<Int>, i: Int, j: Int): Int? {

TODO()

}

Suppose you are given an input set of integers, and a black box that if given any sequence of integers and an integer instantly and correctly answers whether there is a subset of the input sequence whose sum is exactly . Show how to use the black box times to find a subset of that adds up to .

fun test() {

println(findSubset(listOf(3, 5, 8, 2, 1), 6))

println(findSubset(listOf(2, 3, 5, 8, 6, 4), 20))

}

fun findSubset(s: List<Int>, k: Int): List<Int>? {

if (!blackBox(s, k)) return null

var subset = s

for (i in s.indices) {

val subsetWithoutIthNumber = subset.filter { it != s[i] }

if (blackBox(subsetWithoutIthNumber, k)) {

subset = subsetWithoutIthNumber

}

}

return subset

}

fun blackBox(integers: List<Int>, k: Int): Boolean {

// We were not told to implement the black box, so we will hack it for the tests

return if (k == 6) {

integers.containsAll(listOf(1, 2, 3))

} else return if (k == 20) {

integers.containsAll(listOf(2, 8, 6, 4)) || integers.containsAll(listOf(3, 5, 8, 4))

} else {

throw IllegalArgumentException()

}

}

Let be an array of real numbers. Design an algorithm to perform any sequence of the following operations:

Add(i,y) – Add the value to the $i$th number.Partial-sum(i) – Return the sum of the first numbers, that is, .There are no insertions or deletions; the only change is to the values of the numbers. Each operation should take steps. You may use one additional array of size as a work space.

g

Extend the data structure of the previous problem to support insertions and deletions. Each element now has both a ''key'' and a ''value''. An element is accessed by its key, but the addition operation is applied to the values. The ''Partial_sum'' operation is different.

Add(k,y) – Add the value to the item with key .Insert(k,y) – Insert a new item with key and value .Delete(k) – Delete the item with key .Partial-sum(k) – Return the sum of all the elements currently in the set whose key is less than , that is, .The worst-case running time should still be for any sequence of operations.

g

You are consulting for a hotel that has one-bed rooms. When a guest checks in, they ask for a room whose number is in the range . Propose a data structure that supports the following data operations in the allotted time:

Initialize(n): Initialize the data structure for empty rooms numbered , in polynomial time.Count(l, h): Return the number of available rooms in , in time.Checkin(l, h): In time, return the first empty room in and mark it occupied, or return NIL if all the rooms in are occupied.Checkout(x): Mark room as unoccupied, in time.fun test() {

val ds = DataStructure()

ds.initialize(10)

println(ds.root)

println("Available in [2, 5]: ${ds.count(2, 5)}")

val checkIn1 = ds.checkIn(2, 5)

println("Available in [2, 5]: ${ds.count(2, 5)}")

val checkIn2 = ds.checkIn(2, 5)

println("Available in [2, 5]: ${ds.count(2, 5)}")

ds.checkOut(checkIn2!!)

println("Available in [2, 5]: ${ds.count(2, 5)}")

ds.checkOut(checkIn2)

ds.checkOut(checkIn1!!)

println("Available in [2, 5]: ${ds.count(2, 5)}")

}

class DataStructure() {

lateinit var root: BNode

fun initialize(n: Int) {

root = sortedListToBinaryTree((1..n).toList())!!

}

fun sortedListToBinaryTree(list: List<Int>): BNode? {

if (list.isEmpty()) {

return null

}

val mid = list.size / 2

val root = BNode(list[mid])

root.left = sortedListToBinaryTree(list.slice(0 until mid))

root.right = sortedListToBinaryTree(list.slice((mid + 1) until list.size))

return root

}

fun count(l: Int, h: Int): Int {

return countInRange(l, h, root)

}

fun checkIn(l: Int, h: Int): Int? {

val first = findFirstInRange(l, h, root)

first?.isCheckedIn = true

return first?.element

}

fun checkOut(x: Int) {

find(x, root)?.isCheckedIn = false

}

fun countInRange(l: Int, h: Int, node: BNode?): Int {

if (node == null) {

return 0

}

val count = if (node.element in l..h && !node.isCheckedIn) 1 else 0

return if (node.element in l..h) {

count + countInRange(l, h, node.left) + countInRange(l, h, node.right)

} else if (node.element < l) {

count + countInRange(l, h, node.right)

} else {

count + countInRange(l, h, node.left)

}

}

fun findFirstInRange(l: Int, h: Int, node: BNode?): BNode? {

if (node == null) {

return null

}

if (node.element in l..h && !node.isCheckedIn) {

return node

}

return if (node.element < l) {

findFirstInRange(l, h, node.right)

} else if (node.element > h) {

findFirstInRange(l, h, node.left)

} else {

findFirstInRange(l, h, node.left) ?: findFirstInRange(l, h, node.right)

}

}

fun find(x: Int, node: BNode?): BNode? {

if (node == null) {

return null

}

return if (node.element == x) {

node

} else if (node.element < x) {

find(x, node.right)

} else {

find(x, node.left)

}

}

}

data class BNode(

val element: Int,

var isCheckedIn: Boolean = false,

var left: BNode? = null,

var right: BNode? = null,

)

Design a data structure that allows one to search, insert, and delete an integer in time (i.e., constant time, independent of the total number of integers stored). Assume that and that there are units of space available, where is the maximum number of integers that can be in the table at any one time. (Hint: use two arrays and .) You are not allowed to initialize either or , as that would take or operations. This means the arrays are full of random garbage to begin with, so you must be very careful.

fun test() {

val ds = DataStructure(m = 5, n = 10)

ds.insert(7)

ds.insert(3)

ds.insert(6)

ds.insert(5)

ds.insert(9)

println(ds.search(6))

println(ds.search(9))

ds.delete(6)

ds.delete(9)

println(ds.search(6))

println(ds.search(9))

}

class DataStructure(

val m: Int,

val n: Int

) {

val indices = arrayOfNulls<Int>(n + 1)

val values = arrayOfNulls<Int>(m + 1)

var k = 0

fun insert(x: Int) {

k++

indices[x] = k

values[k] = x

}

fun search(x: Int): Int? {

val index = indices[x] ?: return null

return values[index]

}

fun delete(x: Int) {

val xIndex = indices[x] ?: return

if (k == 0) return

val lastValue = values[k]!!

indices[lastValue] = xIndex

values[xIndex] = lastValue

values[k] = null

indices[x] = null

k--

}

}

What method would you use to look up a word in a dictionary?

Use a hash table: Store the word as the key and the definition as the value.

Imagine you have a closet full of shirts. What can you do to organize your shirts for easy retrieval?

Sort them based on specific property like color.

Write a function to find the middle node of a singly linked list.

fun test() {

val node1 = Node("Elvis", null)

val node2 = Node("Chidera", null)

node1.next = node2

val node3 = Node("Nnajiofor", null)

node2.next = node3

val node4 = Node("Jollof", null)

node3.next = node4

val node5 = Node("List", null)

node4.next = node5

println(middleElement(node1))

}

data class Node(

val element: String,

var next: Node?

)

fun middleElement(head: Node): Node {

var hare: Node? = head

var tortoise: Node = head

while (hare?.next != null) {

hare = hare.next?.next

tortoise = tortoise.next!!

}

return tortoise

}

Write a function to determine whether two binary trees are identical. Identical trees have the same key value at each position and the same structure.

fun test() {

val s1 = BNode(

element = 5,

left = BNode(

element = 3,

left = null,

right = null

),

right = BNode(

element = 7,

left = null,

right = null

)

)

val s2 = BNode(

element = 10,

left = BNode(

element = 8,

left = null,

right = null

),

right = BNode(

element = 12,

left = null,

right = null

)

)

println(isIdentical(s1, s2))

println(isIdentical(s1, s1))

println(isIdentical(s2, s2))

}

fun isIdentical(tree1: BNode?, tree2: BNode?): Boolean {

if (tree1 == null || tree2 == null) {

return tree1 == tree2

}

return tree1.element == tree2.element &&

isIdentical(tree1.left, tree2.left) &&

isIdentical(tree1.right, tree2.right)

}

data class BNode(

val element: Int,

var left: BNode?,

var right: BNode?,

)

Write a program to convert a binary search tree into a linked-list.

See Problem 14: Part of the solution involves converting a tree into a linked-list.

Implement an algorithm to reverse a linked list. Now do it without recursion.

fun test() {

val node1 = Node("Elvis", null)

val node2 = Node("Chidera", null)

node1.next = node2

val node3 = Node("Nnajiofor", null)

node2.next = node3

println(reverse(node1))

}

fun reverse(head: Node): Node {

var currentNode: Node? = head

var previousNode: Node? = null

while (currentNode != null) {

val nextNode = currentNode.next

currentNode.next = previousNode

previousNode = currentNode

currentNode = nextNode

}

return previousNode!!

}

data class Node(

val element: String,

var next: Node?

)

What is the best data structure for maintaining URLs that have been visited by a web crawler? Give an algorithm to test whether a given URL has already been visited, optimizing both space and time.

A dictionary that acts as a set:

You are given a search string and a magazine. You seek to generate all the characters in the search string by cutting them out from the magazine. Give an algorithm to efficiently determine whether the magazine contains all the letters in the search string.

fun test() {

val node1 = Node("Elvis", null)

println(containsAllCharacters("Elvis", "The Elfs are very happy"))

println(containsAllCharacters("Elvis", "The Elfs are very happy because it is christmas"))

}

fun containsAllCharacters(string: String, magazine: String): Boolean {

val map = mutableMapOf<Char, Int>()

string.forEach { char ->

map[char] = map.getOrDefault(char, 0) + 1

}

var matched = 0

magazine.forEach { char ->

val count = map[char] ?: 0

if (count > 0) {

matched++

map[char] = count - 1

}

if (matched == string.length) {

return true

}

}

return false

}

Reverse the words in a sentence—that is, “My name is Chris” becomes “Chris is name My.” Optimize for time and space.

fun test() {

println(reverse("My name is Chris"))

}

fun reverse(sentence: String): String {

val words = sentence.split(" ")

var reversedSentence = ""

var i = words.lastIndex

while (i >= 0) {

val word = words[i]

reversedSentence += word

i--

if (i >= 0) {

reversedSentence += " "

}

}

return reversedSentence

}

Determine whether a linked list contains a loop as quickly as possible without using any extra storage. Also, identify the location of the loop.

fun test() {

val node1 = Node("Elvis", null)

val node2 = Node("Chidera", null)

node1.next = node2

val node3 = Node("Nnajiofor", null)

node2.next = node3

val node4 = Node("Jollof", null)

node3.next = node4

val node5 = Node("List", null)

node4.next = node5

node5.next = node3

println(detectLoopLocation(node1)?.element)

}

data class Node(

val element: String,

var next: Node?

)

/**

* https://en.wikipedia.org/wiki/Cycle_detection#:~:text=Floyd's%20cycle%2Dfinding%20algorithm%20is,The%20Tortoise%20and%20the%20Hare.

*/

fun detectLoopLocation(head: Node): Node? {

var hare: Node? = head

var tortoise: Node? = head

while (hare != null) {

hare = hare.next?.next

tortoise = tortoise?.next

if (hare == tortoise) {

break

}

}

if (head.next != null && hare == tortoise) {

hare = head

while (hare != tortoise) {

hare = hare?.next

tortoise = tortoise?.next

}

return tortoise!!

}

return null

}

You have an unordered array of integers. Find the array containing elements where is the product of all integers in except for . You may not use division. You can use extra memory. (Hint: there are solutions faster than .)

fun test() {

println(transform(arrayOf(3, 5, 4)).toList())

println(transform(arrayOf(2, 3, 4, 5, 6)).toList())

}

fun transform(x: Array<Int>): Array<Int> {

val prefixProducts = Array(x.size) { 0 }

val suffixProducts = Array(x.size) { 0 }

var prefixProduct = 1

x.forEachIndexed { i, xi ->

prefixProducts[i] = prefixProduct

prefixProduct *= xi

}

var suffixProduct = 1

var i = x.lastIndex

while (i >= 0) {

val xi = x[i]

suffixProducts[i] = suffixProduct

suffixProduct *= xi

i--

}

return Array(x.size) {

prefixProducts[it] * suffixProducts[it]

}

}

Give an algorithm for finding an ordered word pair (e.g. “New York”) occurring with the greatest frequency in a given webpage. Which data structures would you use? Optimize both time and space.

fun test() {

println(findOrderedWordPairWithMaxFrequency("New york is a great city. I love new york."))

println(findOrderedWordPairWithMaxFrequency("My name is Elvis Chidera. Elvis Chidera is me."))

}

/*

Punctuation marks can mess up the algorithm. Only "." is handled.

*/

fun findOrderedWordPairWithMaxFrequency(text: String): Pair<String, String> {

val words = text.replace(".", "").split(" ")

if (words.size <= 1) throw IllegalArgumentException("Text is empty or only has one word")

val wordPairs = mutableMapOf<Pair<String, String>, Int>()

var i = 1

while (i < words.size) {

val previousWord = words[i - 1]

val currentWord = words[i]

val wordPair = previousWord to currentWord

wordPairs[wordPair] = wordPairs.getOrDefault(wordPair, 1) + 1

i++

}

var maxFrequency = 0

var wordPairWithMaxFrequency: Pair<String, String>? = null

wordPairs.forEach { (wordPair, frequency) ->

if (frequency > maxFrequency) {

maxFrequency = frequency

wordPairWithMaxFrequency = wordPair

}

}

return wordPairWithMaxFrequency!!

}

This chapter discusses sorting, algorithm design paradigms espoused by sorting algorithms and how sorting can be applied in solving other problems.

The first algorithm design paradigm is the application of sorting as an initial step to simplify another computational problem. e.g.

Pairwise comparison (i.e. is or or to $b$) is typical abstracted away into a comparison function that is then passed to the sorting algorithm. This allows the sorting algorithm to be independent of application-specific comparison concerns like:

An example comparison function:

/**

* Returns a negative integer if `e1` is less than `e2`.

* Returns zero if `e1` is equal `e2`.

* Returns a positive integer if `e1` is greater than `e2`.

*/

fun compare(e1: Element, e2: Element): Int {

}

Stable sorting algorithms maintain the relative order of elements with equal keys. That is, a sorting algorithm is stable if whenever there are two elements and with the same key and with appearing before in the original list, will appear before in the sorted list.

Few fast algorithms are naturally stable. Stability can be achieved for any sorting algorithm by adding the initial position as a secondary key.

When equal elements are indistinguishable, such as with integers, or more generally, any data where the entire element is the key, stability is not an issue.

A heap is a tree-based data structure that satisfies the heap property:

The heap is one maximally efficient implementation of a priority queue ADT. In a heap, the highest (or lowest) priority element is always stored at the root. A heap is a useful data structure when it is necessary to repeatedly remove the object with the highest (or lowest) priority.

However, a heap is not a sorted structure; it can be regarded as being partially ordered: there is no implied ordering between siblings or cousins and no implied sequence for an in-order traversal. The heap relation mentioned above applies only between nodes and their parents, grandparents, etc.

Heaps are usually implemented with an array:

For a binary heap, in the array:

This can be generalized into:

Given a node at index ,

Its left child is at index

Its right child is at index

And its parent is at index

This simple indexing scheme makes it efficient to move "up" or "down" the tree.

This implicit structure trades space efficiency for flexibility. e.g. With pointers, we can move subtrees around by changing a single pointer.

To avoid potentially requiring a array to store an n$-element heap, it's required that the binary heap is complete with its element inserted from left to right. This structural constraint allows us to store the $n$-element heap with the first $n indices of the array. When a heap is a complete binary tree, it has the smallest possible height — a heap with nodes and branches for each node always has height.

/**

* Put elements of [array] in heap order, in-place.

*

* Consider the array in reverse order, starting from the last (nth) position. It represents a leaf of the tree and

* so trivial obeys the heap property (i.e. it's larger than its non-existent children). The same is the case for

* the last n/2 positions in the array, because all are leaves.

*

* If we continue to walk backwards through the array we will eventually encounter an internal node with children.

* This element may not obey the heap property (because it might be smaller than its children),

* but its children represent well-formed (if small) heaps. This situation is exactly what [siftDown] was designed

* to do: given a "damaged" binary heap, where the max-heap property (no child is greater than its parent) holds

* everywhere except possibly between the root node and its children, repair it to produce an undamaged heap.

*

* To see that this takes O(n) time, count the worst-case number of [siftDown] iterations.

* The last half of the array requires zero iterations, the preceding quarter requires at most one iteration,

* the eighth before that requires at most two iterations, the sixteenth before that requires at most three, and so on.

*/

private fun heapify(array: ArrayList<Int>) {

// Start is initialized to the first leaf node

// Find the parent of the last element.

var start = iParent(array.lastIndex) + 1

while (start > 0) {

// Go to the last non-heap node

start -= 1

// Sift down the node at index 'start' to the proper place such that all nodes below the start index are in heap order

siftDown(array, start, array.lastIndex)

}

// After sifting down the root all nodes/elements are in heap order.

}

private fun siftUp(array: ArrayList<Int>, index: Int) {

var index = index

while (index > 0) {

val parentIndex = iParent(index)

if (array[parentIndex] < array[index]) {

swap(array, parentIndex, index)

index = parentIndex

} else {

return

}

}

}

/**

* Repair the heap whose root element is at index 'start', assuming the heaps rooted at its children are valid.

*

* The number of iterations in any one [siftDown] call is bounded by the height of the tree, which is ⌊log2 n⌋ = O(log n).

*/

fun siftDown(array: ArrayList<Int>, rootIndex: Int, endIndex: Int) {

var rootIndex = rootIndex

while (iLeftChild(rootIndex) <= endIndex) { // While the root has at least one child

var biggerChildIndex = iLeftChild(rootIndex)

// If there is right child and that child is greater

if (iRightChild(rootIndex) <= endIndex && array[biggerChildIndex] < array[iRightChild(rootIndex)]) {

biggerChildIndex = iRightChild(rootIndex)

}

if (array[rootIndex] < array[biggerChildIndex]) {

swap(array, rootIndex, biggerChildIndex)

rootIndex = biggerChildIndex

// Repeat to continue sifting down the child now.

} else {

// The root holds the largest element. Since we may assume the heaps rooted at the children are valid,

// this means that we are done.

return

}

}

}

private fun swap(array: ArrayList<Int>, i: Int, j: Int) {

val temp = array[i]

array[i] = array[j]

array[j] = temp

}

fun iParent(i: Int): Int {

return floor(i / 2.0).toInt()

}

fun iLeftChild(i: Int): Int {

return (i * 2) + 1

}

fun iRightChild(i: Int): Int {

return (i * 2) + 2

}

data class Heap(private val array: ArrayList<Int>) {

init {

heapify(array)

}

/**

* Add the new element at the end of the heap, in the first available free space.

* If this violates the heap property, sift up the new element until the heap property has been reestablished.

* Since the heap is balanced, the height is $log_2 n$ and hence each insertion costs at most $O(log_2 n)$.

*/

fun insert(element: Int) {

array.add(element)

siftUp(array, array.lastIndex)

}

/**

* Remove the root and insert the last element of the heap in the root.

* If this violates the heap property, sift down the new root to reestablish the heap property.

*/

fun pop(): Int {

val root = array[0]

val lastChild = array.removeLast()

array[0] = lastChild

siftDown(array, 0, array.lastIndex)

return root

}

/**

* Remove the root and put the new element in the root and sift down.

* When compared to pop followed by insert, this avoids a sift up step.

*/

fun replaceRoot(element: Int) {

array[0] = element

siftDown(array, 0, array.lastIndex)

}

}

Heapsort is an in-place comparison-based sorting algorithm which can be thought of as "an implementation of selection sort using the right data structure." Like selection sort, heapsort divides its input into a sorted and an unsorted region, and it iteratively shrinks the unsorted region by extracting the largest element from it and inserting it into the sorted region. Unlike selection sort, heapsort does not waste time with a linear-time scan of the unsorted region; rather, heap sort maintains the unsorted region in a heap data structure to efficiently find the largest element in each step.

Although somewhat slower in practice on most machines than a well-implemented quicksort, it has the advantages of very simple implementation and a more favorable worst-case runtime. Most real-world quicksort variants include an implementation of heapsort as a fallback should they detect that quicksort is becoming degenerate.

Heapsort is an example of the second algorithm design paradigm: Use an appropriate data structure.

/**

* The steps are:

*

* 1. Call the [heapify] function on the array. This builds a heap from an array in O(n) operations.

* 2. Swap the first element of the array (the largest element in the heap) with the final element of the heap. Decrease the considered range of the heap by one.

* 3. Call the [siftDown] function on the array to move the new first element to its correct place in the heap.

* 4. Go back to step (2) until the remaining array is a single element.

*

* The [heapify] operation is run once, and is O(n) in performance.

* The [siftDown] function is called n times and requires O(log n) work each time, due to its traversal starting from the root node.

* Therefore, the performance of this algorithm is O(n + n log n) = O(n log n).

*/

fun heapsort(array: ArrayList<Int>): ArrayList<Int> {

// Build the heap in the array so that largest value is at the root

heapify(array)

var endIndex = array.lastIndex

// The following loop maintains the invariants that every element between 0 to endIndex is a heap, and

// every element beyond endIndex is greater than everything before it (in sorted order).

while (endIndex > 0) {

// [0] is the root and largest value. The swap moves it in front of the sorted elements.

swap(array, 0, endIndex)

// The heap size is reduced by one.

endIndex--

// The swap ruined the heap property, so restore it.

siftDown(array, 0, endIndex)

}

return array

}